By Ron Vale, Tony Hyman, and Jessica Polka

Summary

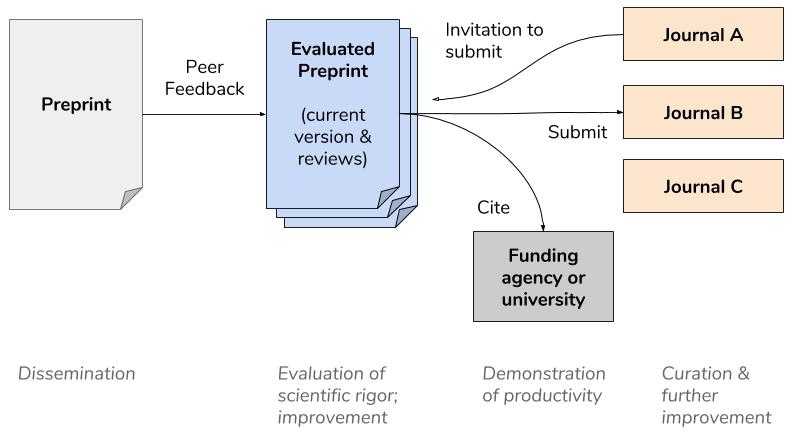

We propose the creation of a scientist-driven, journal-agnostic peer review service that produces an “Evaluated Preprint” and facilitates subsequent publication in a journal.

Introduction

Scientists have a love-hate relationship with peer review. Sadly, this relationship has been drifting towards the latter over time. Much of the problem with peer review, as it currently stands, lies in its dual function: 1) technical evaluation of data and improving the quality, interpretation, and presentation of a scientific work, and 2) impact evaluation, helping editors assess where the paper stands in the field and its suitability for the journal. We feel that peer review has recently become consumed with this latter journal “gatekeeping” function. As a symptom of this trend, it is not uncommon for authors to receive short reviews proclaiming that the study is “more suitable for specialized journal” or requesting a list of experiments to “raise its impact.”

The time has come to disentangle the two roles of peer review. Peer Feedback is a proposed scientist-driven service that tackles the first function by providing high-quality reviews that help scientists improve their work and expedite subsequent publication.

How would Peer Feedback work?

In brief, Peer Feedback could work as follows, although we look to the scientific community now for input on its merit and how best to refine its operation.

- Scientists submit their work to a preprint server or to Peer Feedback and request evaluation. The authors suggest a list of possible referees from an extensive Peer Feedback Review Board, or outside referees if their discipline is not well represented on the Board. Upon receipt, submissions undergo an initial screening that checks for adherence to relevant publishing guidelines, such as those on plagiarism. Peer Feedback will then select and contact referees, offering them the opportunity to review the paper. Once two referees agree, they have two weeks to complete their reports using a template that focuses on constructive technical evaluation. After this period, the reviewers have another week to consult with one another online, following a general strategy implemented by eLife and EMBO J. Importantly, reviewers are encouraged to involve junior colleagues, such as postdocs, in the review, recognizing the respective strengths that senior and junior reviewers bring to evaluation. All reviewers will receive credit for their contribution in a database, which can be used as evidence of public service. The review template will be designed to guide the evaluation, and direct manuscript annotation will also be explored.

- After constructive reviews are obtained, authors (or their institutions or funders) pay for Peer Feedback. Reviewers are paid for their services, recognizing the work involved in providing high-quality reviews. Alternatively, in lieu of payment, referees are encouraged to accept credits toward using the system as authors, or to donate their credits to scientists from under-resourced institutions. Overall, this system creates a transparent fee structure and promotes timely performance by the referees. Initially, ASAPbio plans to cover some of the cost for authors to increase participation. The default will be an “open pipeline”: review reports and the revised manuscript are assigned DOIs and publicly disclosed, regardless of whether the reviewers sign them.

- After Peer Feedback, the authors can respond to the referees and revise their work. We will also explore the possibility of online author-referee consultation during a defined revision period. The revised paper, the reviews, and the author response will be linked together to form an “Evaluated Preprint,” which gives authors several options:

- The Evaluated Preprint can be included as evidence of productivity and rigor in grants, job applications, and promotions, providing added value beyond a preprint.

- The Evaluated Preprint could, in some cases, become a final product. The research community would have open access to the work and the reviews, potentially in partnership with a publication platform.

- The Evaluated Preprint could be used to facilitate journal matchmaking and streamline publication. We envision a number of journals partnering with Peer Feedback. For authors who opt in, a “Dashboard” available to journal editors would allow participating journals to view the Evaluated Preprint, its journal submission status, and the reviewers’ identities, if not public. Through this Dashboard, partnering journals could invite an Evaluated Preprint for submission and indicate whether they would accept it in its revised form or request additional experiments or peer review. The former scenario would ideally result in a lower publication cost to the author.

- The author may decide to submit to another journal that is not part of the Peer Feedback journal network. Even in this case, the author presumably arrives with a stronger submission and a greater chance of acceptance. The journal editor may also use the Evaluated Preprint to better assess whether the paper is a good match for the journal.

We anticipate that the majority of papers that pass through Peer Feedback will not need to be reviewed again, or will need review only for journal suitability. We also hope that the system will promote better journal matching with fewer rejections, since editors will have better information on each submission.

We propose that reviewers be selected through partnerships with several scientific societies. The Review Board will include members of the partnering societies who voluntarily agree to serve as potential reviewers. Reviewers will receive scores on their reviews (from authors, fellow reviewers, and the community). Using these data, we will create a system of referee awards that can be cited as evidence of community service. Performance will be monitored, and reviewers in poor standing (low scores and/or slow turnaround) will be dismissed.

Who Benefits from Peer Feedback?

Science

- Supporting and strengthening the peer review system to better serve its purpose of improving the quality and credibility of scientific work

- Adding credibility to preprints and potentially increasing preprint acceptance

Scientists

- Improved manuscript quality as a result of constructive feedback

- More efficient journal matchmaking reduces overall time and effort to publication

- Cultural change: peer review for improvement of work, not as a “gatekeeper”

- After peer review, a choice of where the work will be published—a flexible pipeline

- As reviewers, rewards for outstanding peer review

Funders

- A review system that focuses on scientific rigor

- Adds a rapid validation process to preprints, increasing their credibility

- Saves scientists’ time through a more efficient publication system with fewer journal rejections

- Promotes transparency in peer review reports

- A transparent pricing model for services (peer review) and path towards sustainability

Journals

- Additional feedback enables better-informed decisions about which work to publish or invite for submission

- Potentially reduces need for managing peer review, saving editorial time and energy and allowing for more rapid publication

- Enables journals to focus on article selection, highlighting of work, and other services

Participating Scientific Societies

- New leadership role in peer review and a seat on the governance body of Peer Feedback

- New benefits for their members (membership on the Review Board or discounts on the service)

- New opportunities for society journals to streamline publication and reduce their costs by interacting with Peer Feedback

Open Questions

Why would I send a manuscript to Peer Feedback when I can send it to my colleagues?

Indeed, Peer Feedback is a formalization of a process that some scientists already employ: getting comments from colleagues before sending the manuscript to a journal. However, such informal feedback among close colleagues lacks the potential rigor and impartiality of peer review and is invisible to the wider community. It therefore lacks the transparency that would be valuable to journals or funding agencies. Peer Feedback not only formalizes this process but also makes it available to those without a personal connection to the experts in question.

Isn’t this just an extra layer of review, with extra time and cost to the author?

Yes, Peer Feedback incurs extra time (3 weeks) and cost (to be determined through cost modeling, and substantially subsidized by ASAPbio at first). But rather than serving as an additional layer, Peer Feedback is intended to be integrated into the publishing system, offering value in three ways. First, many papers are currently rejected by the first journal to which they are submitted (in a survey of clinical researchers, nearly half of papers were submitted to more than one journal; the average journal rejection rate is 50%, though a lower value was reported on page 29 of a recent survey), a phenomenon we are working to understand more quantitatively for our specific disciplines. In many instances, the authors’ expectations are unrealistic. Often, however, the submitted manuscript is lacking in clarity, presentation, or data interpretation. Peer Feedback will allow authors to recognize flaws and improve their work before journal submission, making their first submission more competitive. Second, we anticipate that many journals will work with Peer Feedback because it could reduce their peer review workload and allow them to make better-informed and more efficient decisions on the suitability of a paper. This could potentially result in lower costs for authors as well. A crucial aspect of a journal-agnostic review service is that it minimizes the number of times a paper is reviewed, reducing the load on the community. Third, authors could use the Evaluated Preprint as further evidence of achievement for jobs, grants, and promotions before eventual publication.

Will journals wish to interact with Peer Feedback?

Many scientists are likely to feel that an important value proposition of Peer Feedback is the potential to receive invitations for submission and non-redundant review at journals. An Evaluated Preprint could become a rapid entry point to a journal, potentially being published 1) as is, 2) with minor revisions, or 3) with additional work discussed between the journal editor and the authors. The journal would benefit from the reduced work of arranging peer review and the high probability of publication in their journal. Journals could then solicit further peer review to help them evaluate how important the work is to the field, a separate problem from assessing technical merit. Peer Feedback would ensure that papers are of sufficient technical merit by the time they are submitted.

Will scientists want to review for Peer Feedback?

A large group of respected scientists will be needed to create a Review Board for Peer Feedback. While most scientists already feel overburdened by peer review, we believe that this model may attract many people, a belief we will test with upcoming market research. In addition, certain scientists will be motivated by the focus on data and data presentation rather than on journal suitability and impact. Reviewers will also be compensated if they choose, recognizing the work and service involved. Finally, recognition for constructive reviewing will be made public, which could be valuable for promotions and similar evaluations. Importantly, because membership on the Review Board will be voluntary, we anticipate that it will self-select for referees who want to provide timely, high-quality reviews for authors.

Conclusion

Peer Feedback picks up where preprints leave off: a scientist-driven system for journal-agnostic peer review. Peer Feedback could, over time, overcome some of the inefficiencies inherent in the cycles of journal rejection that take place today. Perhaps more importantly, it fosters a badly needed cultural change in peer review, deconvolving its “gatekeeping” function from its role in establishing scientific credibility and improving the work of the authors. Indeed, an audacious goal of Peer Feedback is to set new standards in the quality of peer review and its utility to science overall.

Peer Feedback does not address the problem of how to rank and evaluate the worth of scientific studies; most scientists (even those participating in Peer Feedback) may still strive to publish in elite journals. However, we feel that disclosure (preprints) and validation through peer review (Peer Feedback) are immediately tractable steps that could gain rapid acceptance in the community and may lead to additional innovations in the future.

Many will be skeptical of Peer Feedback, which is fair and expected for any new effort in publication. However, many also argued that preprints would never be viable in the life sciences, claiming that the culture of life science is “too different” from that of physics. While preprints in the life sciences have a long way to go, the remarkable acceptance of preprints by funding agencies, scientists, and journals in the past two years indicates that change is indeed possible. Still, we acknowledge that a scientist-driven peer review system would be a bold and ambitious experiment. We anticipate building slowly, most likely receiving a few hundred papers in the first year or two and growing to a few thousand afterwards, fine-tuning the system along the way with input from the scientific community and other stakeholders. Credibility is absolutely essential to maximize its chance of success. Peer Feedback would benefit from the credibility derived from partnerships with prestigious scientific societies willing to engage their membership and innovate around their journals. More importantly, a pioneering group of outstanding scientists, both junior and senior, would need to say, “Yes, I am willing to try Peer Feedback.”

Would you be willing to try Peer Feedback, either as an author or as a referee? What are your hopes? What are your concerns? Please fill out the survey below, leave a comment, and feel free to email further thoughts to jessica.polka at asapbio.org; we would love to hear from you! Please share this blog post and survey with your colleagues through social media so that we can integrate broad feedback.

Comments

Hello. This looks a lot like the Peer Community In project and its first offshoot, Peer Community in Evol Biol. Cf. http://www.peercommunityin.org and evolbiol.peercommunityin.org. I hope it will work.

The initiative seems to me to be a step in the right direction. Decoupling evaluation from publication is necessary because the economic models behind them are entirely different.

First, evaluation by peers is part of researchers’ job. The time researchers spend on this work is already paid for by universities and research institutes through their salaries, and the necessary web infrastructure is inexpensive. Thus, I am not in favour of paying referees.

Second, publishing polished articles that are well formatted, proofread, publicized, and sent to the media may cost money. But this should be optional for authors, because it is not strictly a scientific matter.

This is what Peer Community In (https://peercommunityin.org/) proposes: evaluation and validation of preprints by peers, with no need to ultimately publish the preprint in a journal (though this option is left open).

This PCI service is completely free for authors and readers alike.

I’ve enjoyed reading the contributions to this discussion on peer review so far and agree that there is much that we could do to improve the way that journals support the scientific enterprise and the important role(s) that peer review plays.

One consistent theme is that we need to disentangle the various roles that journals have, for example in:

* communicating new findings effectively

* providing quality control to help improve reliability and reproducibility

* evaluating the potential importance of the work

* evaluating the researchers who are responsible for the work

The ideas for breaking these functions apart look interesting and many are already in action, via the use of preprints (bioRxiv), peer review focusing on technical aspects (PLOS ONE and the similar ‘journals’), and combinations of the two like F1000 Research. As several others have said, however, breaking the link between journal publication and the evaluation of researchers is no doubt the hardest challenge of all.

I would just like to emphasise the importance of the community of people involved in all this. One of the other key functions of a journal is to act as a focal point for a community of people who can pretty much be relied on to do a first-rate job in the editorial and peer review process. I’ve seen this time and again in my career in publishing. It begins with the editors at the heart of the journal and extends out to the advisory board and the entire network of reviewers who are called on to help assess the submissions.

Another side to this issue is about getting real buy-in to an alternative approach. At eLife, where I work now, this has been a challenge (as it has in other initiatives I’ve been involved in). The goals of our process are a bit different from the usual approach, and it takes a lot of attention and effort to help people not to revert to ‘normal’ behaviour. So another challenge that any new initiative will face is getting that message across – making sure that reviewers really understand what’s expected, especially if the goal is to separate technical from impact evaluation. In general, there’s a huge amount to be gained from increased training and mentoring in peer review, and perhaps new projects like APPRAISE and PEER FEEDBACK provide an ideal opportunity to do just that. This could help to increase the population of prospective reviewers too.

Thank you for a great set of ideas. One reason reviewers are not as keen to review as we would like is that they get little credit for it, certainly far less than they get for writing an original paper.

If we had a system in which reviewers received academic credit or recognition for significant contributions to peer review, then perhaps peer review would be less of a chore, and no payment for reviews would be necessary.

But of course the recognition system is in the hands of funders and research institutions!

Interesting proposal – I look forward to further discussion at the meeting. For now, a couple of questions that have occurred to me:

Might you need to police this (especially if offered free to authors) to prevent some using it as a proof-reading service?

Have you had any discussions with publishers, and any indication that they might be prepared to reduce APCs for Evaluated Preprints, given that much of the legwork of peer review has already been done? If the big players don’t budge on APCs, this could seriously undermine the project.

Lastly, given the importance of disciplinary communities, mentioned by Mark Patterson (and also evident in PeerJ’s recent announcement that it wants to create such communities within its megajournal), might there be a problem recruiting reviewers who are already dedicated to their discipline or society journals?

It would obviously be good to decouple peer review from gatekeeping. Whether an article is suitable for a given journal is a question for the editor, not for peer reviewers.

I fail to see why you insist that peer reviewers should be paid. This is unnecessary, as peer reviewers are already used to working for free and will have even more incentive to do so in an open system. You do not even mention ‘getting extra revenue’ among the benefits of your system to scientists. On the other hand, the drawbacks of paying reviewers are severe, starting with favouring big established publishers over new, lean organisations (including journals that are free to both authors and readers and survive on modest institutional subsidies).