Blog post by Daniela Saderi and Sarah Greaves

In May 2020 a group of publishers and organisations made a direct call in response to the urgent need to openly and rapidly share and review COVID-19 research. More than 2,000 reviewers signed up: their details were shared with the journals or platforms involved, and they were encouraged to provide ‘preprint reviews’ using PREreview. The reviewers engaged with preprints but editors did not necessarily embrace the reviews. Here we aim to understand why and what needs to change to help close the loop between crowdsourced preprint and journal-organized peer review.

In response to the pressure within academic publishing, especially peer review, driven by the COVID-19 pandemic, a group of publishing organisations called on the research community to work together (“COVID-19 Publishers’ Open Letter of Intent – Rapid Review”, ‘COVID-RR’). The community responded quickly, with 2,179 researchers signing up to a volunteer rapid reviewer pool. All committed to reviewing COVID-19 manuscripts quickly, to having their reports shared between publishers if manuscripts were transferred after peer review, and to having those reports openly published if the journal to which their report was transferred operated an open peer review model.

PREreview was part of the COVID-RR initiative. As one of the early signatories of the letter, the organisation committed to working with publishers and researchers in the group through a dedicated preprint working group (which ran alongside working groups on data deposition and manuscript transfers) to design and develop a workflow to enable their community reviews of COVID-related preprints to contribute more directly to journal-organized peer review.

PREreview hosts a journal-independent open-source platform that enables researchers with an ORCID iD to openly review preprints that are hosted on different preprint servers. One of the outputs from the site is the rapid PREreview, a review form consisting of a series of 12 yes/no/not sure/n.a. questions designed to capture the essence of the preprint and provide an aggregated view of the collective community feedback (an example of an aggregated visualization of five rapid PREreview entries on one preprint can be found here).

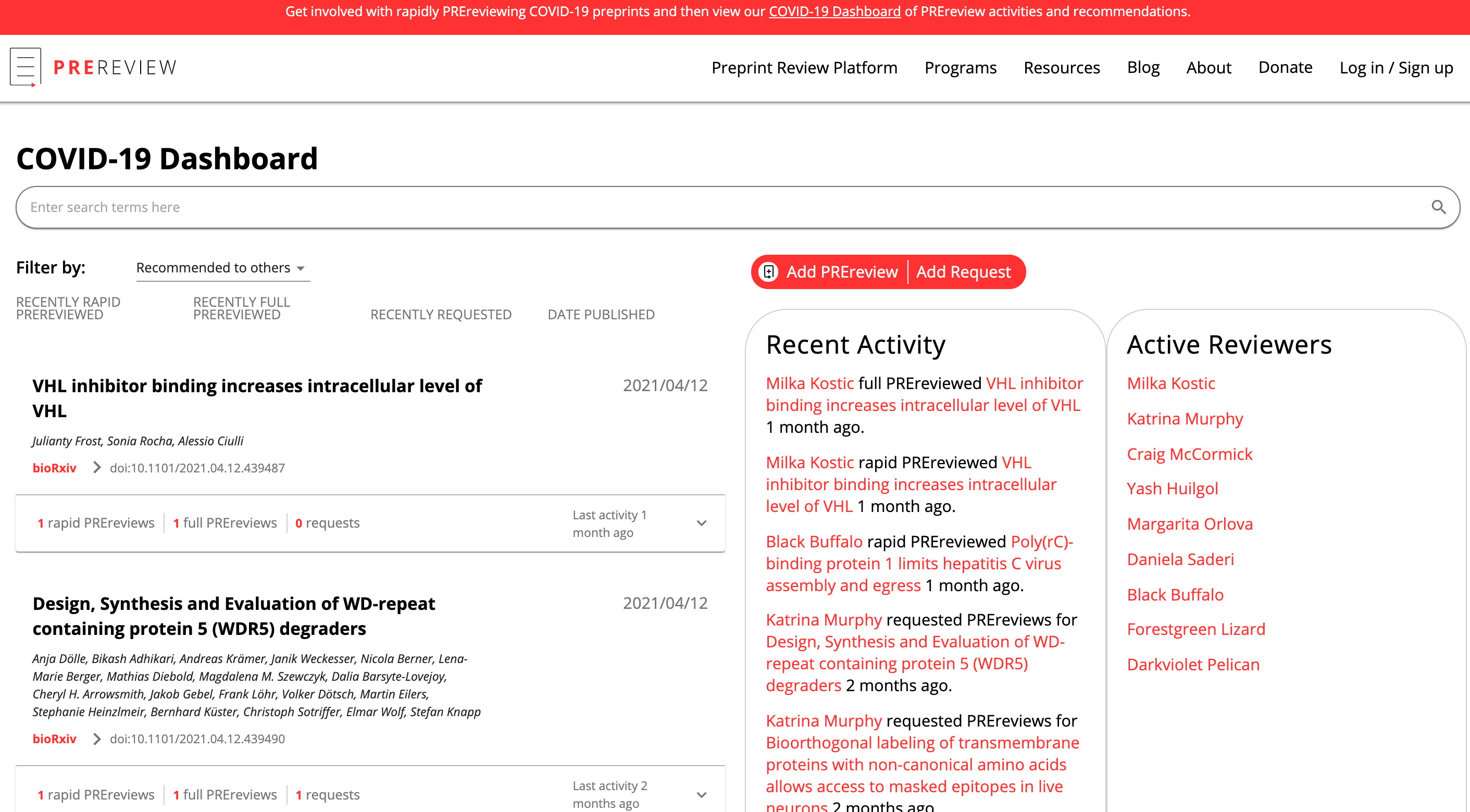

One of the first efforts of the COVID-RR initiative’s preprint working group was to develop a prototype COVID-19 dashboard. This had two main aims. The first was to provide a dedicated page that listed all the COVID-19 relevant preprints that had been flagged by the PREreview community for community review. To further speed up the review of these preprints, the volunteers who had signed up to the COVID-RR initiative to be rapid reviewers were then contacted via email and invited to review the COVID-19 preprints directly on the platform. This led to a temporary increase in preprint reviews posted on the platform.

The second aim of the dashboard was to ‘close the loop’ and enable any COVID-19-related preprint with a community review to be filtered based on recommendations that editors might find useful in selecting papers for journal-organised review. The search criteria included whether the preprint had specifically been recommended by a community reviewer for more formal peer review at a journal, and if the preprint had made the data and/or code in the manuscript available. Editors from journals involved in the initiative were then encouraged to view these reports and use them to inform their peer review process.

To better understand if and how preprint reviews from the community may help make the journal-organized peer review process faster and more efficient, we asked editors from the journals involved in the initiative to fill out a short survey.

The survey was open for about one month and received a limited number of responses (31), which came primarily from editors at the Royal Society and Hindawi. The survey was anonymous, with the option to leave contact details should the participant be willing to be contacted for more information. Below we summarize the results and discuss our interpretation in the context of the initiative.

Summary survey results

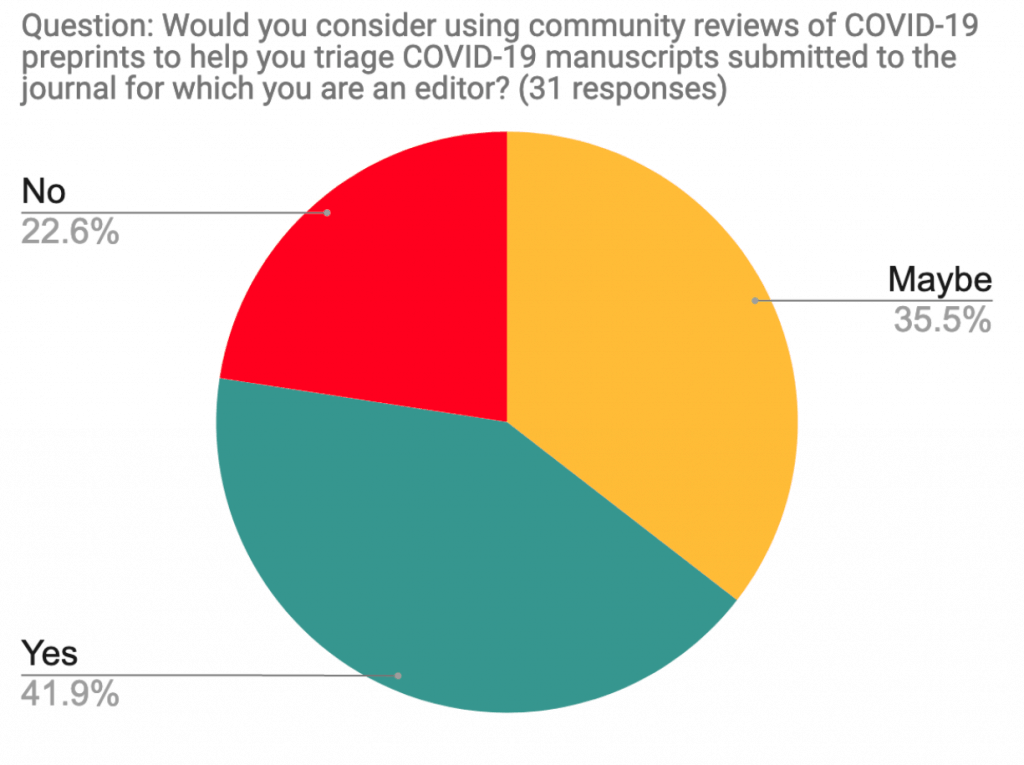

When asked whether they would use community reviews of preprints to triage submissions, over 40% of the respondents said ‘yes’ and another 35% replied ‘maybe’, which suggests a desire to help relieve pressure within the peer review process.

When prompted to share their goals, if any, in connecting the community preprint review and journal-organized peer review workflows, some editors highlighted how preprint reviews could be used to triage incoming manuscripts, helping them identify those worth considering for further review.

“Community reviews might provide insights into the novelty and importance of the study. They could also flag issues relating to the analysis and interpretation of the data that would be helpful before deciding to have the manuscript reviewed.”

[Community reviews might provide a way to] “first filter for subjects which are on the edge of my expertise.”

However, in answering the same question, a few expressed concerns related to the level of trust they would be able to put into the preprint reviews and the reviewers:

“My worry is that community reviews are done by a small and non random subset of the community. I would need a way to assess their quality and representativeness.”

“I wish to be sure the same level of objectivity, thoroughness, skill and lack of bias is applied across all reviews considered.”

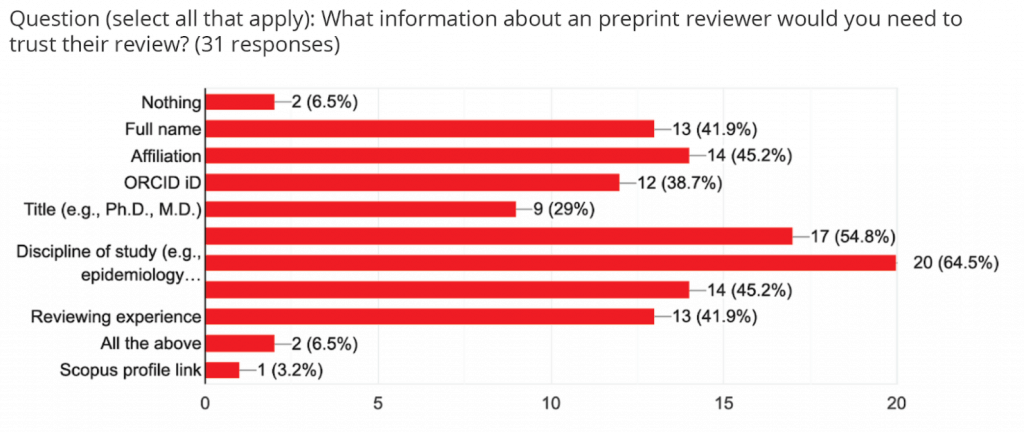

When asked to select what information about the reviewer they would need to have to trust the review, 20/31 respondents said they would need to know the discipline of study of the reviewer, 17/31 would need to know their career level, and an equal weight was put on affiliation and list of publications (14/31 respondents).
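For readers who prefer percentages, the counts above can be converted with a few lines of Python. This is only a small sketch of the arithmetic: the variable and function names are our own, and the counts are the ones quoted in the paragraph above. Because the four counts add up to more than 31, respondents could evidently select more than one option, so the shares are not expected to sum to 100%.

```python
# Counts quoted in the survey summary (out of 31 respondents).
TOTAL_RESPONDENTS = 31
counts = {
    "discipline of study": 20,
    "career level": 17,
    "affiliation": 14,
    "list of publications": 14,
}


def share(count, total=TOTAL_RESPONDENTS):
    """Return the percentage of respondents, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Percentage of respondents who selected each item.
shares = {item: share(n) for item, n in counts.items()}

# Print the items from most to least frequently selected.
for item, pct in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{item}: {pct}%")
```

In other words, roughly two thirds of respondents wanted to know the reviewer's discipline, just over half their career level, and a little under half their affiliation or publication list.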

This result did not come as a surprise: in several informal conversations with editors, the PREreview team heard multiple times how editors are wary of engaging reviewers with whom they have not interacted before.

This presents a challenge for anyone trying to change the review process. How can we even begin to change the system to address the growing crisis in peer review, namely finding reviewers with sufficient expertise and minimising delays to publication? Perhaps even more importantly, how can we collectively move towards a peer review system in which the makeup of the peer reviewer pool is truly reflective of the diversity of research experts across the globe?

We believe any progress made towards an integrated workflow between community preprint reviews and journal-organized peer review will take a coordinated effort across stakeholders in the publishing ecosystem and the research community. This effort must focus on building mutual trust—on one hand, the trust by editors in the preprint review reports and on the other hand, the trust by community researchers that their contributions will be valued and recognized.

What we have learned from the small sample in this survey is that editors’ trust in community reviews may be boosted by implementing the following:

- Ways to easily find community reviews of COVID-19 preprints for manuscripts that editors are handling for peer review;

- Ways to know the identity and expertise of the community reviewers and ability to contact them as potential reviewers for the journal.

Ultimately, we believe editors will want to use previously provided comments on preprints, but they must have all the relevant details on the preprint reviewers (so they can confirm subject area expertise, for example) and a seamless way to integrate those reports into the formal peer review system. The COVID-RR group aims to continue working together, and from September we will be looking at ways to address this collaboratively, for the benefit of the overall publishing ecosystem.

With thanks to Phil Hurst, Ludo Waltman and Catriona MacCallum for edits on this blog post.