Traditional peer review relies on a couple of individuals spending hours on a paper. What if the wisdom of the crowd could get it done much faster? And would this work for the public review of preprints? To find out, in autumn 2021 we coordinated the crowd preprint review trial announced at the #FeedbackASAP meeting. We wanted to test whether the crowd review model pioneered by the journal Synlett would be applicable to preprints, and whether it would be an engaging format for researchers to contribute to public preprint reviews.

We circulated one cell biology preprint per week to participating reviewers (‘the crowd’) and asked them to provide comments via a Hypothes.is group. The reviewers could comment on the full paper or on specific aspects according to their expertise and interest. At the end of a seven-day commenting period, we synthesized the comments received and posted them publicly as a TRIP review on bioRxiv.

The trial ran from the end of August until the middle of December and revealed some unexpected dynamics of reviewer and author participation. Here we share the trial outcomes as well as some lessons learned from this project.

Trial outcomes

We had a great response to our call for participation, with over 100 researchers expressing interest in the trial. In total, 31 crowd members provided comments on at least one preprint, and many of them commented on several preprints. We posted 14 public preprint reviews; with the exception of one week, we posted a review every week during the trial. The public reviews are available via bioRxiv and Sciety. The authors of three preprints have provided responses, which are available alongside the public reviews.

We surveyed the authors of the reviewed preprints about their experience in the trial and received 11 responses. All 11 authors rated the feedback as useful or very useful, and 10 indicated they would recommend this review format to a colleague. Ten of the 11 authors also indicated they would change their manuscript in response to the comments from the crowd review. We also asked authors what might have improved their experience. The main items they selected were: clear messaging that authors are not expected to incorporate or address every point in the reviews; partnerships with journals, so that authors could use the crowd preprint review when submitting the paper to a journal; an opportunity to interact and discuss items with the commenters; and a synthesis of comments more focused on the main results and conclusions of the study.

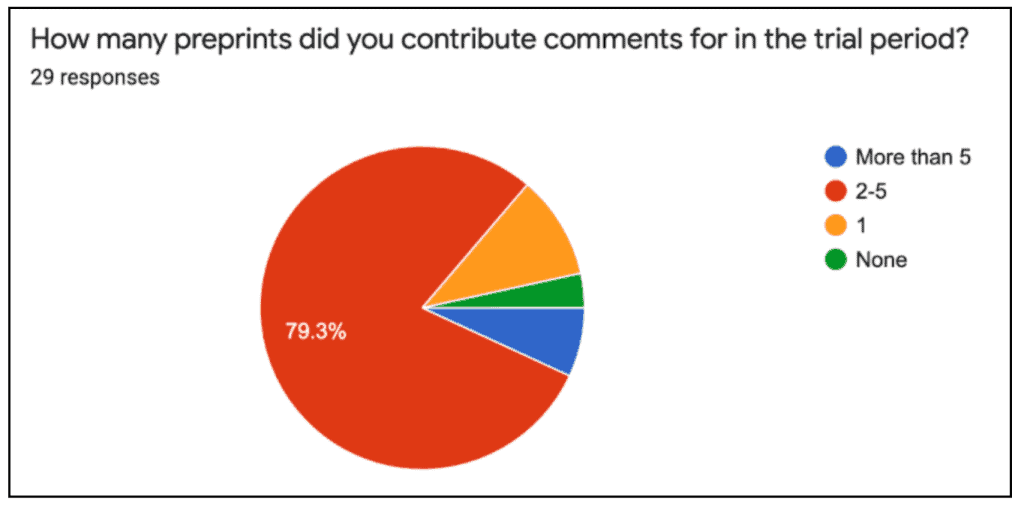

We also surveyed the crowd reviewers about their views on the trial and received 29 responses. Of these, 25 rated their experience in the trial as positive or very positive, and a majority (26) indicated they would be interested in participating in this type of activity in the future. 86% of respondents had commented on two or more preprints, indicating a good level of engagement with the commenting activities.

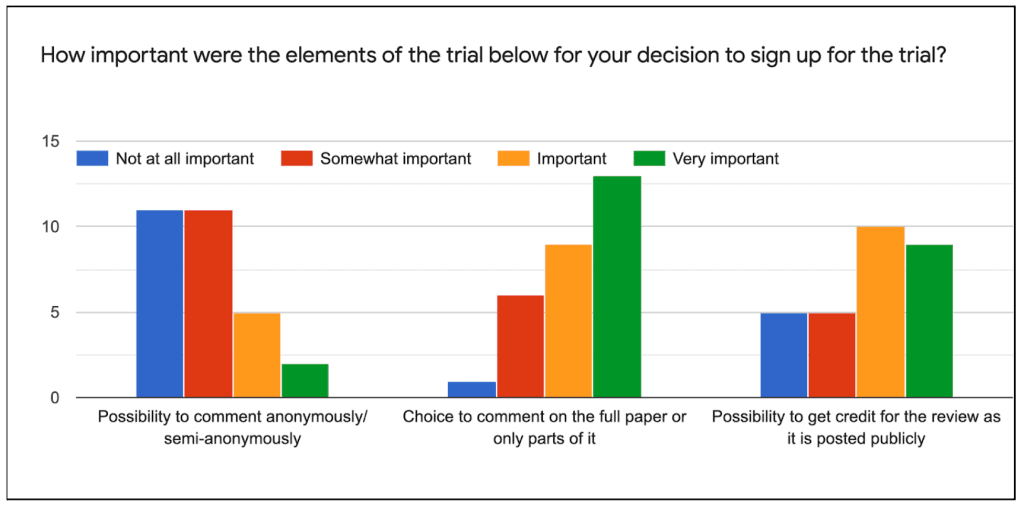

The reviewers valued being able to comment on either the full paper or specific parts of it, as well as the opportunity to receive credit for their reviews, since these are posted publicly. In additional comments, several respondents said that the crowd review approach required less time than a journal review, and they appreciated that this format did not involve judging whether the paper was a fit for a particular journal.

As for potential improvements to the process, several reviewers suggested extending the commenting period (e.g. to two weeks) and providing more opportunities for commenters to discuss points on which they disagreed.

Additional things we learned

Beyond the trial outcomes, we would highlight a few further points:

- Most participants in the trial were early-career researchers, many of whom expressed interest in developing their review skills. There is clear interest in the community in contributing as reviewers, and it is important that we continue to provide ways to engage early-career researchers in review activities.

- Our workflow involved asking authors for consent to include their preprint in the trial. Only 29% of the authors we approached agreed to participate, which suggests there are still cultural barriers to open commentary on research. Several authors who declined said their paper was under review at a journal. This, together with the authors' interest in partnerships with journals, suggests that approaches that facilitate the reuse of public preprint reviews may give authors an incentive to seek public feedback on their preprints.

- Synthesizing the individual comments into a single review took time. Some authors suggested that a more concise review focused on the main points would have been more valuable. For any future iterations of this review model, this step may be worth streamlining to facilitate participation by both reviewers and authors.

What next?

We are pleased that the trial allowed us to contribute to public preprint feedback and that it provided a good experience for both the crowd preprint reviewers and preprint authors. We thank all the reviewers for their many contributions and the preprint authors for their willingness to try this review model with us.

We hope that others will be inspired to try this review modality for preprints. With this in mind, we have developed a toolkit that outlines what to consider when setting up this form of review for preprints, along with resources (e.g. templates) we used in our trial. We invite communities and groups involved in review activities to try this review modality for preprints themselves. If you would like to learn more about how to coordinate this type of review for preprints, please do not hesitate to get in touch (iratxe.puebla@asapbio.org).

We are exploring updates to the scope and workflow for the crowd preprint review modality informed by the outcomes of the trial, and we hope to have more updates to share in 2022.
