Organizer

Life Science Editors (Helen Pickersgill, Angela Andersen, Marie Bao, Carol Featherstone, Shawna Hiley, Sabbi Lall, Li-Kuo Su)

Website or social media links

Current stage of development

Idea

Project duration

Indefinitely

Update

How has your project changed?

We introduced an additional option to incentivize authors to initiate peer review. As an alternative to requesting a traditional formal evaluation of their preprint that could potentially be used by a journal, authors can request informal guidance on how to further develop their study and improve its impact before journal submission. This supports a key reason that authors post preprints. We expect this option to encourage both preprint deposition and peer review, as it provides a novel service not formally available elsewhere.

We all know that low-quality peer review has damaging consequences for science and scientists. High-quality peer review requires a panel of experts with relevant experience covering both technical and conceptual aspects of the paper, as well as oversight to ensure the thoroughness and professionalism of each review. For submissions to journals, an editor selects appropriate reviewers and oversees the quality of the reviews. Our proposal creates this valuable peer review process for preprints by having an author-selected Liaison Team assume these responsibilities. To ensure accountability and high quality, the Liaison Team is named, and we have added the requirement that its members meet key criteria (see below). Ultimately, ensuring high-quality peer review will effectively curate preprints by separating strong preprints from those that do not garner sufficient interest for review.

Instead of providing an overall rating of the preprint, the Liaison Team will write a non-quantitative Impact Summary, given that a single rating cannot capture all aspects.

The time taken to complete reviews will be monitored to ensure a timely process and identify reliable reviewers.

Have you integrated any feedback received?

- To prevent gaming of the system, we will specify criteria to avoid real and perceived conflicts of interest in the selection of Liaison Teams by authors, as is standard at scientific journals. In addition:

- The Liaison Team comprises multiple members, which should minimize individual biases.

- The Liaison Team is identified, which discourages authors from nominating close friends, and gives the team a strong incentive to do a good job – their reputation is at stake.

- Readers can rate the preprints, summaries and reviews. Disconnects between reader ratings and the Liaison Team's assessments could indicate gaming. Liaison Teams that consistently rate papers higher than readers do could be identified and excluded.

- The stakes of the preprint review process are lower – it’s not an accept or reject decision – so there is less incentive to game the system.

- The scientific peer review system already works on trust.

- To manage power dynamics, the process formalizes the contribution of graduate students and postdocs by giving them authorship on the Liaison Team (and on reviews, if they choose to reveal their identity).

- To minimize implicit bias, we will provide educational materials and a structured review template.
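The reader-rating check in the anti-gaming list above can be sketched in a few lines. This is a minimal illustration, not part of the proposal: the record fields (`team`, `team_rating`, `reader_ratings`), the function name `flag_inflated_teams`, and the default thresholds are all assumptions chosen for the example.

```python
from statistics import mean


def flag_inflated_teams(records, min_preprints=3, threshold=1.0):
    """Return Liaison Teams whose ratings consistently exceed reader ratings.

    Each record is a dict with hypothetical keys:
      "team"           - identifier of the Liaison Team
      "team_rating"    - the team's rating of one preprint (1-5 stars)
      "reader_ratings" - list of reader ratings for the same preprint
    A team is flagged when it has handled at least `min_preprints` rated
    preprints and its average gap over readers is at least `threshold` stars.
    Both cut-offs are illustrative assumptions, not values from the proposal.
    """
    gaps_by_team = {}
    for record in records:
        if not record["reader_ratings"]:
            continue  # no reader ratings yet, so nothing to compare against
        gap = record["team_rating"] - mean(record["reader_ratings"])
        gaps_by_team.setdefault(record["team"], []).append(gap)

    return sorted(
        team
        for team, gaps in gaps_by_team.items()
        if len(gaps) >= min_preprints and mean(gaps) >= threshold
    )


# Made-up example data for illustration only.
example = [
    {"team": "A", "team_rating": 5, "reader_ratings": [3, 3]},
    {"team": "A", "team_rating": 5, "reader_ratings": [4, 3]},
    {"team": "A", "team_rating": 4, "reader_ratings": [2, 3]},
    {"team": "B", "team_rating": 4, "reader_ratings": [4, 4]},
    {"team": "B", "team_rating": 3, "reader_ratings": [3, 4]},
    {"team": "B", "team_rating": 5, "reader_ratings": [5]},
]
print(flag_inflated_teams(example))
```

Requiring a minimum number of preprints before flagging a team keeps a single disagreement from counting as gaming; in practice the cut-offs would need to be calibrated against real rating data.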

Have you started any collaborations?

Our proposal is a collaboration between LSE and Dr. Joseph Wade, and we are discussing the proposal with bioRxiv/medRxiv. Endorsement by, and collaboration with, the NIH, NCBI and Open Access journals would increase its value.

Project aims

Overview of the challenge to overcome

Peer review is central to the scientific process, but is managed primarily by journals behind closed doors, limiting its value to the community. Reviews of rejected papers, and of most published papers, are rarely posted. Peer review of preprints side-steps journal constraints and democratizes the evaluation of science by scientists. Peer review would improve preprint quality and curation but, without incentives and standards, the number and quality of reviews vary widely. Additionally, refereed preprints and their reviews are difficult to find. We propose to integrate peer review directly into preprint servers and hand control of this process back to authors and their peers.

The ideal outcome or output of the project

A system to generate consistent, valuable, discoverable, and open peer reviews. Most preprints would have reviews.

Description of the intervention

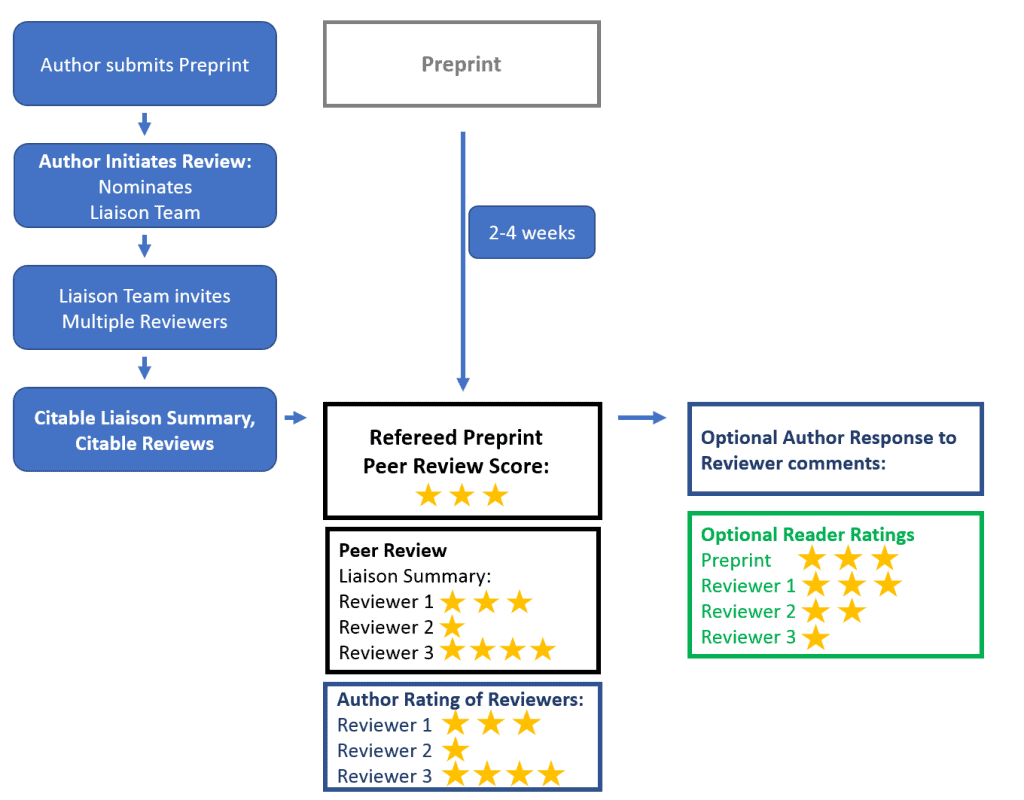

The author submits a preprint and can nominate a “Liaison team”: a lab/team headed by a PI to handle the peer review process. The team approach promotes training and diversity, and reduces biases. The server automatically sends an invitation: if it is accepted the process moves forward; if not, the author can suggest an alternative team or cancel the process.

• The Liaison team receives information on managing constructive peer review and invites multiple individuals/labs to review the preprint. The author can suggest reviewers.

• Reviewers have a unique identifier and can remain anonymous. Reviewers are searchable by reviews, scientific expertise, and peer review experience with grants, journals, and/or preprints.

• Reviewers are invited to watch videos on how to write a review (from the NIH) and on implicit bias. They receive a structured template to facilitate review, reduce bias, and improve consistency and searchability. Reviewers complete their review within two weeks and rate the preprint (1–5 stars).

• The Liaison team summarizes the reviews, gives an overall rating, and links its summary and the reviews to the preprint. The reviews and summary are citable.

• Authors can rate the Liaison team and the reviews, and can respond to them.

• Refereed preprints are highlighted on the server and can be searched and sorted.

• Readers can rate the reviews (1–5 stars) and the preprint (1–5 stars; this rating is separate from the reviewer rating).

• Invitations from peers, citable outputs, and ratings create incentives.

Plan for monitoring project outcome

At baseline, 3 months, and 12 months, assess the number of preprints with reviews, reviews with ratings, and responses from authors, as well as downloads and citations of preprints.
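The monitoring plan above amounts to taking the same set of counts at each checkpoint and comparing them. A minimal sketch follows, assuming a hypothetical per-preprint record (`PreprintRecord`) and helper names (`snapshot`, `change`) that are not part of the proposal or of any preprint server's API.

```python
from dataclasses import dataclass


@dataclass
class PreprintRecord:
    """Hypothetical per-preprint counters a server might expose."""
    reviews: int = 0            # reviews linked to the preprint
    rated_reviews: int = 0      # reviews that have received a rating
    author_responses: int = 0   # responses posted by the authors
    downloads: int = 0
    citations: int = 0


def snapshot(preprints):
    """Aggregate the monitored counts at one checkpoint."""
    return {
        "preprints_with_reviews": sum(1 for p in preprints if p.reviews > 0),
        "reviews_with_ratings": sum(p.rated_reviews for p in preprints),
        "author_responses": sum(p.author_responses for p in preprints),
        "downloads": sum(p.downloads for p in preprints),
        "citations": sum(p.citations for p in preprints),
    }


def change(before, after):
    """Difference in each count between two checkpoints."""
    return {key: after[key] - before[key] for key in before}


# Made-up numbers for illustration only.
baseline = snapshot([PreprintRecord(), PreprintRecord(reviews=1, rated_reviews=1, downloads=10)])
month_3 = snapshot([
    PreprintRecord(reviews=2, rated_reviews=1, author_responses=1, downloads=30, citations=1),
    PreprintRecord(reviews=1, rated_reviews=1, downloads=25),
])
print(change(baseline, month_3))
```

Taking a full snapshot at each checkpoint, rather than tracking only differences, keeps the baseline, 3-month, and 12-month figures directly comparable.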

What’s needed for success

Additional technology development

Technology to monitor and sort ratings, post reviews and responses, and ensure reviewer anonymity.

Feedback, beta testing, collaboration, endorsement

Survey authors, reviewers, readers, journal editors, preprint servers.

Collaborate with preprint servers, NIH.

Funding

Funding to develop training materials, algorithms, and functionalities of preprint servers.
