By Rebecca Lawrence & Vitek Tracz, F1000, rebecca.lawrence@f1000.com

For over five years at F1000 we have been successfully running a service that is essentially a preprint coupled with formal, invited (i.e. not crowd-sourced) post-publication peer review. We call these services platforms, to distinguish them from traditional research journals, and we have consequently amassed significant experience of running such a system. Here we summarise the approach we have taken and share some data on our experiences and what we have learnt along the way, in order to support the discussions within the ASAPBio community and elsewhere.

We have developed and operated this model on F1000Research since 2013, and now operate the same model as a service to a number of funders and institutions, including Wellcome, the Bill & Melinda Gates Foundation, the Irish Health Research Board, the Montreal Neurological Institute and many others. The platforms are controlled by these organisations who provide them as a service to their grantees and researchers.

We see F1000 as a service company to the research community and the organisations that represent them (funders, research institutions etc). Everything we do, as well as the experience we have accumulated, we are happy to offer as a service to anyone who thinks they can benefit from it and who shares our objective of speeding up the dissemination of new research and improving research integrity and transparency.

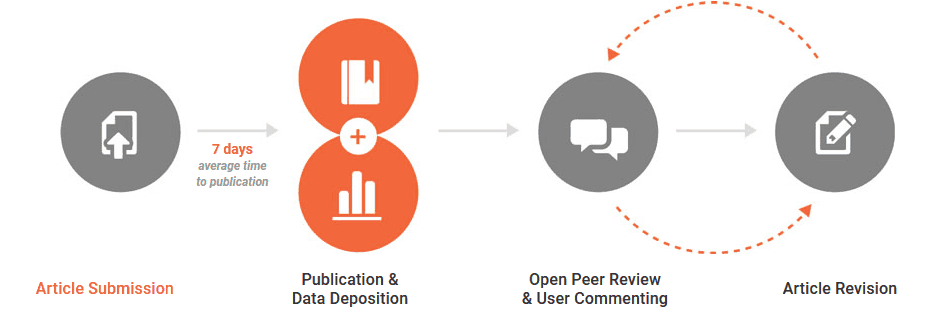

The model we have developed can be split into two core steps:

- Submission and preprint-like stage

- Post-publication peer review and indexing

Submission and preprint-like stage

Pre-publication checks. Following article submission, an internal team (largely postdocs) conducts a series of pre-publication checks. These are significantly more rigorous than those typically conducted on traditional preprint servers and more closely resemble those found in journals. They include, for example, whether the article is scientific work from a scientist, whether it is plagiarised, whether it meets ethical requirements, whether it is readable and meets community standards, and whether the source data (which we require) have been provided and adhere to FAIR principles.

So far, we have received over 2,500 submissions on F1000Research, 75% of which have passed our pre-publication checks and been published. On Wellcome Open Research (the platform we have been operating on behalf of Wellcome since Nov 2016 for their grantees), we have received almost 190 submissions, 86% of which passed our checks (rejections were mostly due to lack of relevant grants).

Publication speed. All articles that pass the checks are published with a DOI (i.e. they are citable). Publication time has remained consistently fast. Across the platforms we operate, our pre-publication checks take a median of 2 working days. Unlike standard preprint servers, all published articles are typeset with NLM-compliant XML and associated with detailed metadata to maximise readability, accessibility and text mining. This production stage (including author proofing) takes a median of 4-5 working days. In total, the time from submission to publication is a median of 14-16 working days, which includes the time authors take to address any queries raised during the checks.

At this point, the article is still very much like a preprint except that, at the time of submission, the authors commit themselves to immediate, formal, invited post-publication peer review. This means that, unlike with a preprint, the authors cannot now send this article to a journal.

Post-publication peer review & indexing

Selection of referees. Authors select referees from a list we provide of acceptable referees, or they can suggest others. The internal team check all selected referees for relevant experience and obvious conflicts of interest, and then invite the approved referees on the authors’ behalf.

Despite initial concerns, including our own, that it would be harder to recruit referees for open peer review, we have found this not to be the case. The average number of reviewers invited for articles that have ‘passed’ peer review (i.e. met the requirements for indexing in the bibliographic databases) is 10.

Refereeing process. Referees are asked to do two things: provide a status (approved; approved with reservations; not approved), and a supporting referee report. They are guided through structured forms to consider specific aspects of the article, depending on article type. The peer review questions are focussed on technical aspects of the work, including the appropriateness of study designs and methods, and the extent of support for conclusions drawn. We are also working on separate tools to assess perceived impact and importance of the findings.

The referee reports are published alongside the article and are signed with the referees’ names and affiliations. Our initial concerns that referees would be biased towards more positive responses have not materialised; we believe that the transparency means referees take the process more seriously, being candid about their views of the article and more constructive.

Revisions and versioning. With this author-driven approach (there are no Editors making decisions), the authors decide whether, how and when they wish to respond to the referees and whether they want to revise. New versions are published and are added to the history of the article but are independently citable; referees can update their approval status with each version. The article title also updates dynamically with the details of both the version number and the referee status.
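To make the idea of a dynamically updating title concrete, here is a minimal sketch of how a version number and the current referee statuses could be appended to an article title. The function name `versioned_title` and the exact bracketed format are hypothetical, chosen only for illustration; they are not taken from the platforms themselves.

```python
from collections import Counter

# The three referee statuses named in the text, in display order.
STATUS_ORDER = ("approved", "approved with reservations", "not approved")


def versioned_title(title, version, statuses):
    """Append the version number and a referee-status summary to a title.

    The bracketed suffix format is an illustrative assumption.
    """
    counts = Counter(statuses)
    parts = [f"{counts[s]} {s}" for s in STATUS_ORDER if counts[s]]
    review = ", ".join(parts) if parts else "awaiting peer review"
    return f"{title} [version {version}; referees: {review}]"


print(versioned_title("A study", 2, ["approved", "approved with reservations"]))
```

Each time a new version is published or a referee updates their status, the suffix would be regenerated from the current state rather than stored with the title.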

Once an article achieves two ‘Approved’ statuses from the referees, or one ‘Approved’ and two ‘Approved with Reservations’, it is indexed in the major bibliographic databases such as PubMed and Scopus, which then index all versions, past and future. The ability to formally revise an article is an essential part of the process. It is taken up by a significant proportion of authors (sometimes more than once), especially to address critical comments from referees.
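The indexing threshold described above can be written as a small rule. The sketch below is illustrative only: the names `Status` and `passes_peer_review` are hypothetical, the thresholds are read as "at least" the stated counts, and ‘Not Approved’ statuses are simply ignored, since the text does not say how they interact with the rule.

```python
from collections import Counter
from enum import Enum


class Status(Enum):
    APPROVED = "approved"
    APPROVED_WITH_RESERVATIONS = "approved with reservations"
    NOT_APPROVED = "not approved"


def passes_peer_review(statuses):
    """Return True if the current referee statuses meet the indexing threshold.

    Assumed rule: at least two 'Approved', or at least one 'Approved'
    plus at least two 'Approved with Reservations'.
    """
    counts = Counter(statuses)
    approved = counts[Status.APPROVED]
    reservations = counts[Status.APPROVED_WITH_RESERVATIONS]
    return approved >= 2 or (approved >= 1 and reservations >= 2)


# One 'Approved' and one 'Approved with Reservations' is not yet enough.
print(passes_peer_review([Status.APPROVED, Status.APPROVED_WITH_RESERVATIONS]))
```

Because referees can update their status with each new version, a check like this would be re-evaluated against the latest status from each referee whenever a report is added or revised.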

In addition, any researcher who provides their name and affiliation can comment throughout the process. Authors decide when they wish to stop changing the article. The referees and authors can also come back at any time to raise additional issues or to provide further updates. Thus, there is no final unchangeable version of the article.

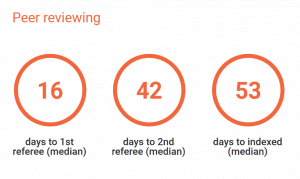

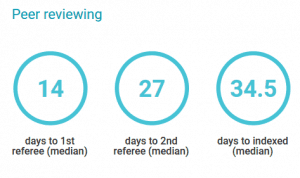

Refereeing process speed. Importantly, because the F1000 internal team manages the logistical aspects of the whole process, our peer review process is very fast. We believe this is largely due to the ability of reviewers to get credit for their work in open peer review, and the hands-on editorial support provided to authors and reviewers.

Credit for referees. Peer review activity is now being increasingly recognised by research institutions as an important part of assessing whether researchers are contributing to the community as a whole rather than simply to their own work. We have worked to enable referees to obtain credit for this work, through inclusion of DOIs on every report (making them citable), and enabling (and indeed encouraging) the inclusion of these reports on ORCID profiles and in Publons. In fact, according to ORCID, F1000 is the second largest source of referee reports on ORCID (9,363), behind Publons (192,612).

We have also worked to ensure that early career researchers, who in reality often do the bulk of the refereeing work, get the credit they deserve. To that end, we encourage all our invited referees to list their co-referees on the reports. Uptake has grown significantly, from 4% to 12% of our referees on F1000Research between 2013 and 2017.

Technology and infrastructure

Whilst experimenting with the model, we have also built a solid technological infrastructure to handle the whole publishing process, which, as we’ve discovered, becomes significantly more complex once article versions are involved. We’ve also worked to maximise links with core scholarly infrastructure providers such as the major bibliographic indexers, repositories and archives, ORCID, CrossRef, data & software repositories and visualization tools, impact & analysis tools, authoring tools, reference management systems, and many more. In addition, we have built powerful tools to support authors and referees through highly efficient referee finders, referee report authoring systems, tools to enable authors to easily respond to referees and update their articles, etc.

Learning from our experiences

During the 5+ years we have now been operating this model, we have adapted and adjusted many aspects of the process as we gained real world experience of what works in practice and what doesn’t. Our experience is that author-driven peer review makes it no harder to get reviewers than in journals, and that the review process is faster and the reviews much more constructive. Having a team run the logistical operation of the peer review process on the authors’ behalf ensures active participation of referees in reviewing each published article. Transparency is also a crucial part of the approach, bringing very significant benefits to all parties: the authors, the referees and the readers.

We see our role not as publishers, but as service providers to the scholarly community. We do not wish to control the platforms but rather evolve them with the needs stipulated by the community. Our approach and associated systems are predicated on the fact that the researchers themselves are in charge of their article and make the key decisions.

Having now gained significant experience in publishing what are essentially preprints to start with, coupled with transparent post-publication peer review, and having built a fully operational technology suite to enable what is a highly complex process, we now see our role in providing the infrastructure, tools and editorial services required by different parts of the scholarly community to experiment in this space. We’re therefore keen to engage with as many different research communities as possible to support this crucial shift in control of scholarly communication from publishers and editors to the community itself.
